Introducing Jydoop: Fast and Sane Map-Reduce

Analyzing large data sets is hard, but it’s often way harder than it needs to be. Today, Taras Glek and I are unveiling a new data-analysis tool called jydoop. Jydoop is designed to allow engineers without any experience in HBase/Hadoop to write data analyses on their local machine and then deploy the analysis to our production Hadoop cluster. We want to enable every Mozilla engineer to use telemetry and crash-stats data as effectively as possible.

Goals

Jydoop started with three simple goals:

- Enable fast prototyping/testing

  - Setting up hadoop/hbase is unreasonably difficult and time-consuming, and engineers should not need a hadoop/hbase setup in order to write an analysis. Because Jydoop analyses are written in Python, they can be developed and tested on a local machine without Hadoop or even Java.

- Don’t hide map/reduce

  - In a query language like pig, it is hard to know exactly how your query will perform: which tasks will run on the map nodes, which will run on the reducers, and which must be run on the final reduced data. Jydoop doesn’t hide those details: each analysis has simple map/combine/reduce functions so that engineers know how a clustered query is going to behave.

- Be fast

  - Performance of clustered queries should be at least as good as our existing solutions using pig or other existing tools.
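To make the map/combine/reduce split concrete, here is a minimal sketch that simulates the three phases in memory. It is not Jydoop's driver: `ListContext`, `run_phase`, and `run_job` are hypothetical helpers, and the `(key, values, context)` signature for combine and reduce is an assumption made for this illustration.

```python
from collections import defaultdict

class ListContext:
    """Hypothetical stand-in for the context object: collects written pairs."""
    def __init__(self):
        self.pairs = []

    def write(self, key, value):
        self.pairs.append((key, value))

def map(key, value, context):
    # Map phase: runs once per record and emits a count keyed by the value.
    context.write(value, 1)

def sum_values(key, values, context):
    # Summing is associative, so the same function can serve as the
    # combiner (per-node partial sums) and as the final reducer.
    context.write(key, sum(values))

combine = sum_values
reduce = sum_values

def group(pairs):
    # Collect all values emitted for the same key.
    grouped = defaultdict(list)
    for k, v in pairs:
        grouped[k].append(v)
    return grouped

def run_phase(func, pairs):
    # Call func once per distinct key with the list of its values.
    ctx = ListContext()
    for k, vs in sorted(group(pairs).items()):
        func(k, vs, ctx)
    return ctx.pairs

def run_job(partitions):
    # Each partition plays the role of one map node: map, then combine locally.
    combined = []
    for records in partitions:
        ctx = ListContext()
        for k, v in records:
            map(k, v, ctx)
        combined.extend(run_phase(combine, ctx.pairs))
    # The reducer sees only the combined partial results.
    return dict(run_phase(reduce, combined))

partitions = [
    [("id1", "nightly"), ("id2", "release"), ("id3", "nightly")],
    [("id4", "release"), ("id5", "release")],
]
result = run_job(partitions)
print(result)
```

The combiner shrinks each node's output before anything crosses the network, which is why it matters that you can see and control it.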

Prototyping

Telemetry data takes the form of a single blob of JSON which is stored in an hbase table. A telemetry ping may be larger than 100 KB, and we typically receive about 2 million telemetry pings per day. In order to allow engineers to test an analysis, we save a small sample of reports (5000 or so) in a single file. Analysis scripts can then be written and tested against the sample.

The simplest job just acts as a filter. The following script selects all telemetry records which have an “androidANR” key and saves them to a file:

    import telemetryutils

    # Ask telemetryutils to set up our query by date range using the correct hbase tables
    setupjob = telemetryutils.setupjob

    def map(key, value, context):
        if value.find("androidANR") == -1:
            return
        context.write(key, value)

To test this script against sample data, run `python FileDriver.py scripts/anr.py sampledata.txt`.

To run this script against a single day of telemetry data, run `make ARGS='scripts/anr.py anrresults-20130308.txt 20130308 20130308' hadoop`.
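For a feel of what local testing of a map-only job involves, here is a minimal sketch of a driver loop. It is not the implementation of `FileDriver.py`: the `CollectingContext` class, the `run_map_only` helper, and the tab-separated "key, then JSON" sample format are all assumptions made for illustration.

```python
import io

class CollectingContext:
    """Hypothetical context object that records every written pair."""
    def __init__(self):
        self.results = []

    def write(self, key, value):
        self.results.append((key, value))

def anr_map(key, value, context):
    # The same filter as the script above, renamed so it does not
    # shadow Python's builtin map() in this standalone example.
    if value.find("androidANR") == -1:
        return
    context.write(key, value)

def run_map_only(mapfunc, lines):
    # Assumed sample format: one record per line, key and JSON separated by a tab.
    ctx = CollectingContext()
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        key, value = line.split("\t", 1)
        mapfunc(key, value, ctx)
    return ctx.results

# An in-memory stand-in for a sample data file.
sample = io.StringIO(
    'ping-1\t{"info": {}, "androidANR": "trace"}\n'
    'ping-2\t{"info": {}}\n'
)
matches = run_map_only(anr_map, sample)
print([key for key, _ in matches])
```

Because the map function only needs a key, a value, and something with a `write` method, nothing here depends on Hadoop or Java.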

More complex analyses are also possible. The following script will calculate the bucketed distribution of the size of the telemetry ping:

    import telemetryutils
    import jydoop
    import json

    setupjob = telemetryutils.setupjob

    # Bucket width in bytes (0x400 == 1024); a float so the division below is not truncated
    kBucketSize = float(0x400)

    def map(key, value, context):
        j = json.loads(value)
        channel = j['info'].get('appUpdateChannel', None)
        # If .info.appUpdateChannel is not present, we don't care about this record
        if channel is None:
            return

        bucket = int(round(len(value) / kBucketSize))
        context.write((channel, bucket), 1)

    combine = jydoop.sumreducer
    reduce = jydoop.sumreducer

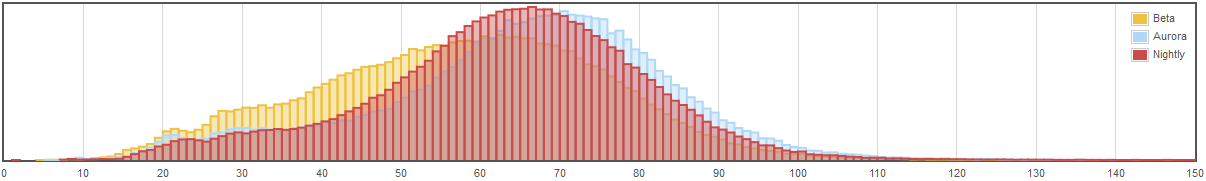

I was then able to produce this chart with the result data and a little JS:
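The bucketing arithmetic in the ping-size script is easy to check by hand. The sketch below applies the same `len(value) / 1024` rounding to a few synthetic pings and tallies them per `(channel, bucket)`. The `make_ping` helper and the sample sizes are invented for this example, and a `Counter` stands in for the summing done by the combiner and reducer.

```python
import json
from collections import Counter

K_BUCKET_SIZE = float(0x400)  # 1024 bytes, as in the script above

def bucket_for(value):
    # Round to the nearest bucket: bucket n holds pings of roughly n KB.
    # Exact halves round differently on Python 2 (and Jython 2.7) and
    # Python 3, so the sample sizes below avoid them.
    return int(round(len(value) / K_BUCKET_SIZE))

def make_ping(channel, size):
    """Hypothetical helper: build a JSON ping exactly `size` characters long."""
    base = json.dumps({"info": {"appUpdateChannel": channel}, "pad": ""})
    padding = "x" * (size - len(base))
    return json.dumps({"info": {"appUpdateChannel": channel}, "pad": padding})

samples = [
    ("nightly", 300),
    ("nightly", 1500),
    ("release", 1100),
    ("release", 1400),
    ("release", 2900),
]

counts = Counter()
for channel, size in samples:
    value = make_ping(channel, size)
    j = json.loads(value)
    counts[(j["info"]["appUpdateChannel"], bucket_for(value))] += 1

for key in sorted(counts):
    print(key, counts[key])
```

The output is one count per `(channel, bucket)` pair, which is the shape of data a chart like this one needs.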

History and Alternatives

The native language for Hadoop map/reduce jobs is Java. Writing an analysis directly in Java requires careful construction of a class hierarchy and is extremely tedious. Even more annoying, it’s basically impossible to test an analysis without having access to a hadoop/hbase environment. Even if you use something like the cloudera test VMs, setting up hbase and mapreduce jobs on test data is onerous.

It is a venerable Hadoop tradition to build wrapper tools around mapreduce which run distributed queries. There are tools such as Hadoop Streaming which allow map/reduce to forward to arbitrary programs via pipes. There are at least five different tools which already combine Hadoop with python. Unfortunately, none of these tools met the performance and prototyping requirements for Mozilla’s needs.

At Mozilla, we currently mostly use pig, a query language and execution tool which transforms a series of query and filter statements into one or more map/reduce jobs. In theory, pig has an execution mode which can prototype a query on local data. In practice, this is also very difficult to use, and so most people prototype their pig scripts directly on the production cluster. It works after a while, but it’s not especially friendly.

Performance

The key difference between Jydoop and the existing Python alternatives is that we use Jython to run the Python code directly within the map and reduce jobs, rather than shelling out and communicating via pipes. This allows for much tighter integration with hadoop and also allows us to control the execution environment.

For maximum performance, we use a custom key/value class which serializes and compares Python objects. For performance and sanity, this class operates on a limited subset of Python types: analysis scripts are limited to using only None, integers, floats, strings, and tuples of these basic types as their keys and values.
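If you want to catch an unsupported key or value while prototyping, a small check like the following can help. This is a hypothetical helper, not part of Jydoop. It assumes nested tuples are acceptable, which the description above does not spell out, and it lets `bool` through because `bool` is a subclass of `int` in Python.

```python
def is_allowed(obj):
    """Return True if obj is None, an int, a float, a string,
    or a tuple made up only of such values (nesting assumed to be fine)."""
    if obj is None or isinstance(obj, (int, float, str)):
        return True
    if isinstance(obj, tuple):
        return all(is_allowed(item) for item in obj)
    # Lists, dicts, sets, and arbitrary objects are rejected.
    return False

print(is_allowed(("nightly", 3)))   # a tuple of a string and an integer
print(is_allowed(["nightly", 3]))   # a list is not in the allowed set
```

In practice this means a compound key should be built as a tuple such as `(channel, bucket)`, never as a list or dict.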

While developing Jydoop, we also discovered that JSON parsing performance was a significant factor in the overall job speed. The performance of the `json` module which is built into jython 2.7 is horrible. We got better but not great performance using jyson as a drop-in JSON replacement. Eventually we wrote a complete json replacement which wraps the standard Jackson streaming API. With these improvements in place, it appears that jydoop performance beats pig performance on equivalent tasks.
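To get a rough sense of how much a full JSON parse costs per record, you can compare it with a plain substring search, as in the ANR filter earlier. The sketch below uses the standard `json` module on whatever Python you run it with, so the timings say nothing about Jython, jyson, or the Jackson wrapper. The synthetic payload and the two helper functions are invented for this example.

```python
import json
import timeit

# A synthetic ping with a few hundred histogram entries.
payload = json.dumps({
    "info": {"appUpdateChannel": "nightly"},
    "histograms": {"H%d" % i: list(range(20)) for i in range(500)},
    "androidANR": "stack trace text",
})

def has_anr_by_search(value):
    # Cheap: scans the raw text without building any objects.
    # It also matches the string if it appears inside a value.
    return value.find("androidANR") != -1

def has_anr_by_parse(value):
    # Precise: parses the whole document, then checks the top-level keys.
    return "androidANR" in json.loads(value)

search_time = timeit.timeit(lambda: has_anr_by_search(payload), number=200)
parse_time = timeit.timeit(lambda: has_anr_by_parse(payload), number=200)
print("substring search: %.4fs, full parse: %.4fs" % (search_time, parse_time))
```

Multiply the per-record difference by a couple of million pings a day and the parser choice becomes a big part of the job's runtime.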

Conclusion

Jydoop is now available on github.

We hope that jydoop will make it possible for many more people to work with telemetry, crash, and healthreport data. If you have any issues or questions about using jydoop with Mozilla data, please feel free to ask questions in mozilla.dev.platform. If you are using jydoop for other projects, feel free to file github issues, submit github PRs, or email me directly and let me know what you’re up to!
