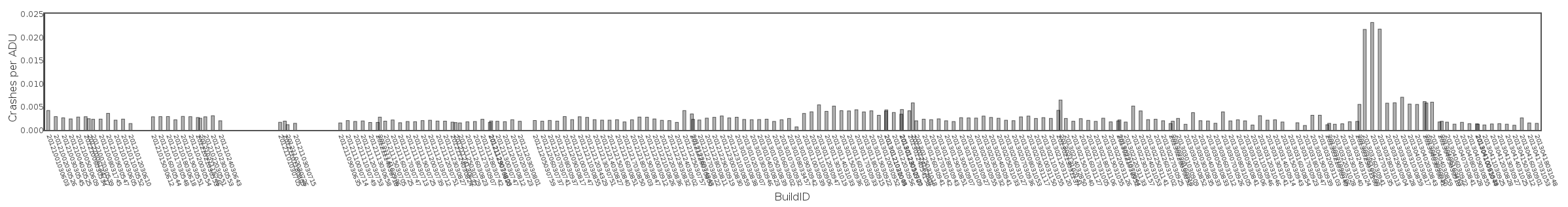

Graph of the Day: Empty Minidump Crashes Per User

Monday, April 22nd, 2013

Sometimes I make a graph to confirm a theory. Sometimes it doesn’t work. This is one of those days.

I created this graph in an attempt to analyze bug 837835. In that bug, we are investigating an increase in the number of crash reports we receive which have an empty (0-byte) minidump file. We’re pretty sure that this usually happens because of an out-of-memory condition (or an out of VM space condition).

Robert Kaiser reported in the bug that he suspected two date ranges of causing the number of empty dumps to increase. Those numbers were generated by counting crashes per build date. But they were very noisy, partly because they didn’t account for the differences in user population between nightly builds.

In this graph, I attempt to account for crashes per user. This was a slightly complicated task, because it assembles information from three separate inputs:

- ADU (Active Daily Users) data is collected by Metrics. After normalizing the data, it is saved into the crash-stats raw_adu table.

- Build data is pulled into the crash-stats database by a tool called ftpscraper and saved into the releases_raw table. Anything called “scraper” is finicky, and changes to other systems can break it.

- Crash data is collected directly in crash-stats and stored in the reports_clean table.

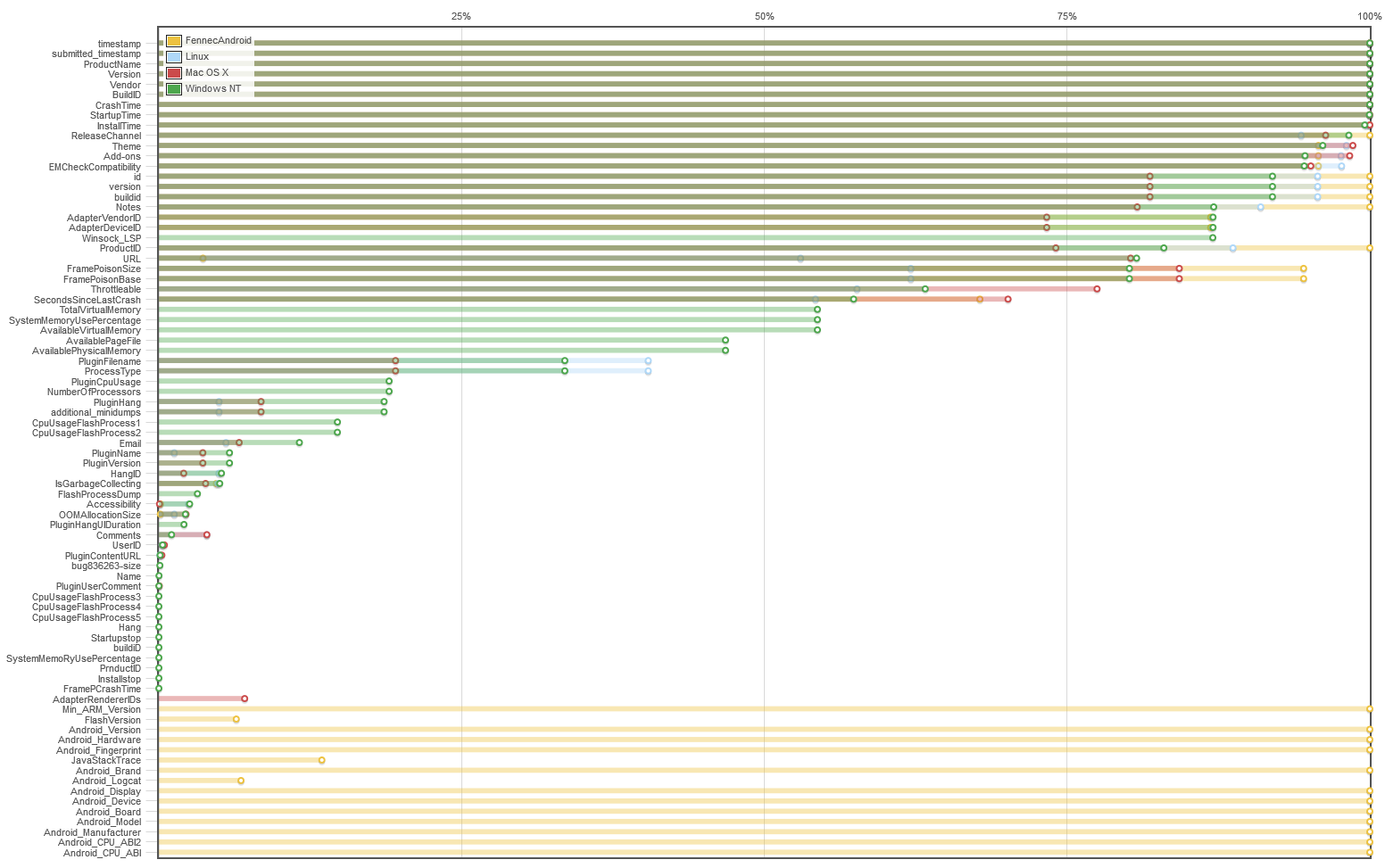

Unfortunately, each of these systems has its own way of representing build IDs, channel information, and operating systems:

| Table | Product | Build ID | Channel | OS |
|---|---|---|---|---|
| raw_adu | “Firefox” | string “yyyymmddhhmmss” | “nightly” | “Windows” |
| releases_raw | “firefox” | integer yyyymmddhhmmss | “Nightly” | “win32” |
| reports_clean | “Firefox” (from product_versions) | integer yyyymmddhhmmss | “Nightly” when selecting from reports_clean.release_channel, but “nightly” when selecting from reports.release_channel. | “Windows NT”, but only when a valid minidump is found: when there is an empty minidump, os_name is actually “Unknown” |
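To make the mismatch concrete, here is a minimal Python sketch of bringing the three representations onto one common form. It is not the code used for the graph: the function names and the `OS_ALIASES` mapping are hypothetical, and the only values it knows about are the ones listed in the table above.

```python
from datetime import datetime

# Hypothetical mapping covering only the OS spellings listed in the table.
OS_ALIASES = {"windows": "windows", "win32": "windows", "windows nt": "windows"}


def normalize_build_id(value):
    """Return a build ID as a 14-digit string, whether it arrived as str or int."""
    text = str(value).strip()
    if len(text) != 14 or not text.isdigit():
        raise ValueError("not a yyyymmddhhmmss build ID: %r" % (value,))
    # Also reject impossible dates such as month 13.
    datetime.strptime(text, "%Y%m%d%H%M%S")
    return text


def normalize_channel(value):
    """Fold 'Nightly' and 'nightly' into one spelling."""
    return value.strip().lower()


def normalize_os(value):
    """Map a known OS spelling to 'windows'; anything else becomes 'unknown'."""
    return OS_ALIASES.get(value.strip().lower(), "unknown")
```

Note that `normalize_os("Unknown")` stays `"unknown"`: an empty-minidump crash carries no OS information, so treating it as a Windows crash remains an explicit decision for the caller.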

In this case, I’m only interested in the Windows data, and we can safely assume that almost all of the empty minidump crashes occur on Windows. The script/SQL query to collect the data simply limits each data source separately and then combines them after they have been limited to Windows nightly builds, users, and crashes.
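The filter-then-combine approach could look roughly like the following Python sketch. It is an illustration under stated assumptions, not the actual script or SQL: the row dictionaries and their keys (`build_id`, `adu_count`, `empty_dump`, and so on) are hypothetical stand-ins for the real columns, and summing ADU across days for a build is my own simplification.

```python
from collections import defaultdict


def empty_dump_crashes_per_user(adu_rows, release_rows, crash_rows):
    """Return {build_id: empty-dump crashes / users} for Windows nightly builds."""
    # 1. Limit each source separately, using that source's own spelling.
    builds = {
        str(r["build_id"])
        for r in release_rows
        if r["product"] == "firefox" and r["channel"] == "Nightly" and r["os"] == "win32"
    }

    users = defaultdict(int)
    for r in adu_rows:
        if r["product"] == "Firefox" and r["channel"] == "nightly" and r["os"] == "Windows":
            users[r["build_id"]] += r["adu_count"]  # assumption: sum over days

    crashes = defaultdict(int)
    for r in crash_rows:
        # Empty dumps report os_name "Unknown", so filter on the empty dump
        # itself and assume (as the post does) that these are Windows crashes.
        if r["channel"] == "Nightly" and r["empty_dump"]:
            crashes[str(r["build_id"])] += 1

    # 2. Combine on the build ID, now a string everywhere.
    return {
        b: crashes.get(b, 0) / users[b]
        for b in sorted(builds)
        if users.get(b, 0) > 0  # skip builds with no user data
    }
```

A build that is absent from `release_rows` never appears in the result, however many users or crashes it has.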

The missing builds are the result of ftpscraper failure.

I’m not sure what to make of this data. It seems likely that we may have fixed part of the problem in the 2013-01-25-03-10-18 nightly. But I don’t see a distinct regression range within this time frame. Perhaps around 25-December? Of course, it could also be that the dataset is so noisy that we can’t draw any useful conclusions from it.