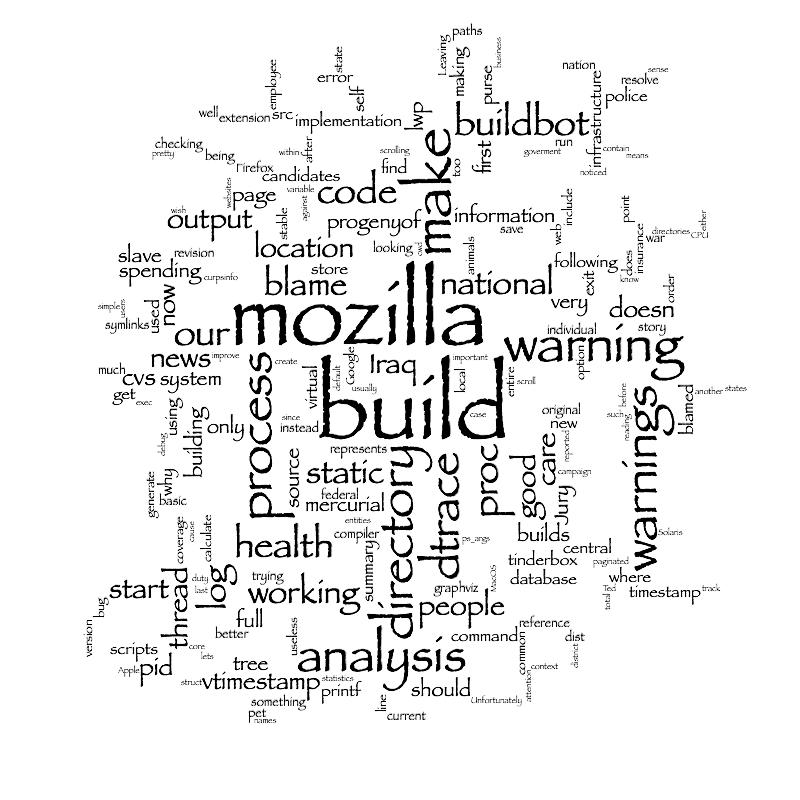

Ted and I have been looking rather closely at the performance of the Mozilla build system. In order to get a better sense of where we’re spending time, I wanted to use dtrace to get statistics on an entire build.

Basic Process Information From DTrace

In theory, the dtrace proc provider lets a system administrator watch process and thread creation for a tree of processes. Using normal dtrace globals, you can track the process parent, arguments, working directory, and other information:

/* progenyof($1) lets us trace any subprocess of a specific process, in this case the shell from

which we launch the build */

proc:::create

/progenyof($1)/

{

printf("FORKED\t%i\t%i\t%i\n", timestamp, pid, args[0]->pr_pid);

}

proc:::exec

/progenyof($1)/

{

printf("EXEC\t%i\t%i\t%s\t%s\n", timestamp, pid, curpsinfo->ps_args, cwd);

}

proc:::exit

/progenyof($1)]

{

printf("EXIT\t%i\t%i\n", timestamp, pid);

}

Unfortunately, the MacOS implementation of dtrace doesn’t reflect information very well:

- curpsinfo->ps_args doesn’t contain the entire command-line of the process; it only contains the first word

- cwd doesn’t contain the entire working directory /builds/mddepend/ff-debug but only the last component ff-debug. Since many of our directories within the tree share names such as src and public, the information is pretty much useless.

Process CPU Time in DTrace

Dtrace doesn’t give scripts a simple way to track the CPU time used by a process: the kernel psinfo_t struct does have a pr_time member, but this is of non-reflected struct timestruc_t.

There is another way to calculate this: dtrace exposes a variable vtimestamp which represents, for each thread, a virtual timestamp when that thread was executing. By subtracting the vtimestamp at proc:::lwp-start from the vtimestamp at proc:::lwp-exit you can calculate the time spent in each thread, and use sums to calculate the per-process total.

proc:::lwp-start

/progenyof($1)/

{

self->start = vtimestamp;

}

proc:::lwp-exit

/self->start/

{

@[pid] = sum(vtimestamp - self->start);

self->start = 0;

}

END

{

printf("%-12s %-20s\n", "PID", "TIME");

printa("%-12i %@i\n", @);

}

Unfortunately, the MacOS implementation of DTrace has a serious bug in the implementation of proc:::lwp-start: it isn’t fired in the context of the thread that’s being started, but in the context of the thread (and process!) that created the thread. This means that the pid and vtimestamp reported in the probe are useless. I have filed this with Apple as radar 6386219.

Summary

Overall, the bugs in the Apple implementation of DTrace make it pretty much useless for doing the build system profiling I intended. I am now trying to get an OpenSolaris virtual machine up for building, since I know that DTrace is not broken on Solaris; but never having used Solaris before, I’ll save that story for another day.